This has been ported over to my GitHub site and is not longer being maintained here. For any issues, comments or updates head here.

In a previous post I looked at coming up with a process for determining XOR keys that were 256 bytes. I've received and have read some great feedback/posts regarding the tool and even though I wrote it in such a way to try and still possibly see patterns/repetitive bytes for smaller XOR keys, that wasn't its purpose. There are plenty of other tools out there to try and assist oneself when dealing with XOR'ed files, however, recently a co-worker and I were left unsuccessful after exhausting those resources.

I'm often asked to look at some artifact that's believed to be encoded in some fashion or hear that even if something is XOR'ed that they wouldn't know how to go about decrypting/decoding it. I'm by no means an expert and sometimes find myself just as lost as you might feel but I thrive on learning and challenges, hence why I decided to work in the dfir space.

I believe this type of scenario is just like most others - the more time you spend doing it, the easier it becomes. Additionally, pattern recognition is key when it comes to XOR (pun intended). Determining the XOR key and any other skips etc. that might be used can be quite trivial, but let's look at a few ways that make this type of scenario harder:

- You don't have access to the source code of the file responsible for performing the XOR

- You don't have access to the binary responsible for performing the XOR

- You don't have the knowledge/skills/resources

- The key you think should work isn't working

In a recent blog post there was mention of the malware named XtremeRAT and additionally a few tools to help in scenarios where you're investigating incidents involving it. One of the scripts listed there is for decrypting a keylog file created from XtremeRAT with a two byte XOR key of '3fa5'. While it's helpful to know that two byte XOR key is used, what if it doesn't work on your file (bullet point number 4 mentioned above)? Or what if there's a new variant using a different XOR key that you now need to try and figure out? To try and solve these questions I decided to leverage a combination of YARA, the script xortools from Malware Analysts Cookbook (the book that keeps on giving) and use case examples from some others within the YaraExchange. Xortools has some useful functions for creating different XOR's, permutations and then spitting them out into YARA rules... sweet, right?

The functions within xortools didn't quite have a solution for what I was trying to do but some quick modifications to a couple of them was easy enough to implement. Let's break down the thought process:

- I wanted to generate a list of all possible combinations of two byte XOR keys (e.g. - 1010, 1011, 1012 etc.).

- Using those combinations I then wanted to XOR a string of my choosing

- With the resulting XOR'ed string I wanted to create a YARA rule for their hex bytes.

- I also wanted to keep track of the two byte XOR key being used for each rule and add them to the rules name so if/when a rule triggers, the XOR key is easily identifiable - this wasn't currently included in xortools so see my modified functions

- Wash, Rise, Repeat.... this would entail creating different strings that you wanted XOR'ed. I have a list that I usually feed to xorsearch such as 'http', 'explorer', 'kernel32' but in this particular instance I needed a list of strings that were likely to appear in a keylog file, such as:

- Backspace

- Delete

- CLIPBOARD

- Arrow Left

- Arrow Right

- Caps Lock

- Left Ctrl

- Right Ctrl

For some additional hints on what you might see within a keylog file, check out Ian's YARA rule for DarkComet_Keylogs_Memory.

Good thought process thus far, but what if those strings aren't contained within the keylog file? You wouldn't necessarily know unless you've previously dealt with this malware or have come across an example online...so another approach to think about is what is likely to be recorded on the system? Here are some examples I've found helpful:

- Company name (most likely keylogged email and/or Internet browsing)

- The persons name/user name

- Microsoft

This should help make things more flexible and tackling the unknown aspect.

First things first... create a function to generate every combo of two byte XOR keys:

The top is the original and the bottom is an example of how to generate the pair by adding another loop and at the end saving the two byte key for use in the rule name. Note: Doing it this way may produce hex characters that are only a nibble and YARA will not like that if you're trying to match on hex characters so to circumvent it, I decided to add a wild card (?) as the other nibble.

Next, we need to feed those two bytes to an XOR function and XOR the string we passed it. Finally, leverage the 'yaratize' function to create the YARA rule. I got things working and when I went to scan the XOR'ed keylog files I received 'Error 25' from YARA (sad face). After some troubleshooting I was told this issue was being caused by having too many strings in a single rule. Essentially, Error 25 'ERROR_EXEC_STACK_OVERFLOW' meant I was hitting a hard limit on the stack size. No bueno... My options were to tweak line 24 in libyara/exec.c or create better YARA rules. By creating so many strings and using the pre-existing 'yaratize' function within xortools my rule looked followed this structure:

You'll notice it's the standard rule format most of you are probably familiar with seeing: rule name followed by the strings to match and at the bottom (not shown) would be the condition. After some testing I determined that ~16k strings to match on seemed to be the limit that YARA would accept in a single rule (that's based on my systems config. + length of string to match etc.). Back to my options - I could tweak that setting in YARA which I didn't want to, have a counter and only add X amount of strings to match per rule or the third option of creating one rule per string. The third might not be familiar to some of you but that's what I opted to go with. It creates a larger file because of all the extra characters you're adding but with the new version of YARA, performance shouldn't really be too much of a factor. An example of this type of format is:

Now that this hurdle was bypassed, I was able to use the YARA rules generated. On a test file that I XOR'ed with the key '3fa5' the YARA rules worked ...however, they still weren't working on the keylog files from XtremeRAT - Err!

Note: the (-s) switch to YARA tells it to print out what matched, which is important here because our string name has the XOR key in it and the (-f) switch tells it to use fast matching mode, which only prints out the first match in the file instead of every time it's matched.

Alright, so let's pop open the XOR'ed test file I created and check out its hex and compare it to what I was seeing in the XtremeRAT files:

Here's what the test file looks like XOR'ed and in plain text, respectively:

And here is an image of the first 10 lines of two keylog files from XtremeRAT. If you scroll through this example you'll notice the first file has a second byte consistently of '00' while the second file has a second byte consistently 'a5':

If you've read anything on XOR'ing before you may be aware that XOR keys can present themselves based on what they're XOR'ing (hence why sometimes they have skips/checks implemented). Focusing on the bottom file, I'd say 'a5' is part of the XOR key - if not the key itself (depends on the length you're dealing with). Circling back to the XtremeRAT blog post we know a common key is '3fa5' so it appears we're being presented with half the key when we browse through the XOR'ed keylog file. Now if you recall back to previous YARA rules being created, I was producing a straight two byte XOR without any skips... if you look at the above files you'll realize, or maybe after some troubleshooting, that this conversion won't work in this instance as the keylog file doesn't have each byte sequentially (e.g - If the word within the keylog file we're looking for is 'Microsoft', the keylog file doesn't show it as that word XOR'ed in order, but rather with 'a5' in between each XOR'ed character.) Hm, what's happening? According to the blog post,

"XtremeRAT's key scheduling algorithm (KSA) implementation contains a bug wherein it only considers the length of the key string, not including the null bytes between each character, as found in these Unicode strings".

Now without having the binary or source code to make that determination (which I didn't), it should still become evident if you try and do a comparison:

When stuck or first starting off with something like this you can reference online tables or use online systems to see binary/decimal/hex conversions but it might be worth while figuring out how to do it programmatically in something you feel comfortable with - python, perl, bash, M$ Excel etc. to try and see what's going on.

Below is another copy of the same exact table shown above, but this time with two columns highlighted. The top column helps show each character within the string 'Microsoft' as its value in hex once it's XOR'ed with the single byte key of '3f'. The bottom column contains the same information, but has the second half of the XOR key 'a5' inserted in between each of the strings characters.

In other words? - Because XtremeRAT uses a two byte XOR key and has null bytes in between each character, the second part of the two byte XOR key 'a5' is always displayed. Essentially, it becomes a one byte XOR key as each character is always XOR'ed with the first half of the XOR key '3f'. So how do we compensate for this? After generating the permutations for every two byte XOR key we just read each character one at a time from the string we supply it then XOR each of them with the first half of the two byte key and add the second half of the two byte key right after it as itself (represented in the bottom blue column above).

Once we do that, bingo! :

We first see what the new YARA rule for '3fa5' looks like (which as the second byte as itself 'a5') and first see that it doesn't match on a file that's XOR'ed normally with the two byte key '3fa5' and lastly that it now matches on a keylog file XOR'ed from XtremeRAT with the added null byte routine.

So how easy is it to code? Pretty easy since the majority of it existed, just some slight modifications and you're good to go. You just need to modify the permutations function to generate combos of two byte XOR keys:

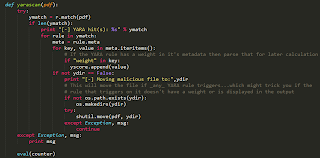

and push them over to the xor routine of need:

and finally to a function to create the YARA rules:

Other than that, just import the required functions and supply them with the required data; so for the modified functions I created, I could just say:

and voila, game over. This should hopefully have helped explain a little more on what XOR is, how to go about detecting it and another resource you can use in the future for trying to brute force what a possible XOR key is based on some common strings that might be present. Since xortools is hosted on Google code I opted to put up a modified version on my github instead of just a patch. I'm not the original author of all the code, just a guy modifying as needed.